Amazon VPC CNI vs Calico CNI vs Weave Net CNI on EKS

I have run into a roadblock in my AWS EKS cluster because of the Amazon VPC CNI. The first blocking issue is that the CNI limits the number of pods that can be scheduled on each k8s node according to the number of IP addresses available to the node's EC2 instance type, because every pod is allocated its own IP address. The other problem is that the Amazon VPC CNI consumes the IP addresses available within my VPC (note: this can be overcome by using an alternate subnet).
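For context, the per-node pod limit comes from a formula AWS publishes for the VPC CNI's default secondary-IP mode: the number of ENIs times (IPv4 addresses per ENI minus one), plus 2 for pods that use host networking. The Go sketch below only evaluates that formula. The ENI and IP counts for t3.medium and m5.large are AWS's published limits for those instance types, and `maxPods` is a hypothetical helper name.

```go
package main

import "fmt"

// maxPods evaluates the VPC CNI per-node pod limit (default secondary-IP mode):
// each ENI's primary IP is not handed to pods, and 2 is added for
// host-network pods such as aws-node and kube-proxy.
func maxPods(enis, ipsPerENI int) int {
	return enis*(ipsPerENI-1) + 2
}

func main() {
	// ENI and per-ENI IPv4 limits as published by AWS for these instance types.
	limits := []struct {
		instanceType    string
		enis, ipsPerENI int
	}{
		{"t3.medium", 3, 6},
		{"m5.large", 3, 10},
	}
	for _, l := range limits {
		fmt.Printf("%s: %d pods\n", l.instanceType, maxPods(l.enis, l.ipsPerENI))
	}
}
```

For example, an m5.large node tops out at 29 pods no matter how much CPU or memory is still free.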

Due to these pain points, I decided to start looking into alternative CNIs. I don’t want to sacrifice too much performance when choosing one, and I want the installation process to be easy. There is a wonderful article comparing the performance of the various popular Kubernetes CNIs here. Unfortunately, that comparison doesn’t include any numbers for the Amazon VPC CNI, so I decided to run my own simple benchmark comparing the Amazon VPC CNI against Calico and Weave Net. Note that my comparisons are very simple and are only intended to give a basic understanding of how the VPC CNI stacks up against the more thorough comparisons in the aforementioned benchmark article.

I conducted my benchmark by running a very simple gRPC ping/pong app written in Go. The client app sends a gRPC message to the server on startup. After this initial message, both apps (the client and the server) send a response message every time they receive one. This gives me a simple infinite loop of messages that are never sent concurrently: only one message is ever in transit at a time. If you are interested in seeing the code, check it out here.
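To make the message pattern concrete, here is a minimal Go sketch of the same strictly alternating exchange. It is not the benchmark code: two unbuffered channels stand in for the gRPC connection, and `pingPong` is a hypothetical name. Because each side only sends after it receives, there is never more than one message in flight, so the message rate is bounded by latency rather than bandwidth.

```go
package main

import (
	"fmt"
	"time"
)

// pingPong simulates the alternating client/server exchange with unbuffered
// channels instead of gRPC. Message 0 is the client's startup message; every
// received message triggers exactly one reply until total messages have been
// sent. It returns how many messages each side sent.
func pingPong(total int) (clientSent, serverSent int) {
	if total <= 0 {
		return 0, 0
	}
	toServer := make(chan int)
	toClient := make(chan int)
	serverDone := make(chan int)

	// Server: reply to each received message with the next sequence number.
	go func() {
		sent := 0
		for seq := range toServer {
			if seq+1 == total {
				break
			}
			toClient <- seq + 1
			sent++
		}
		close(toClient)
		serverDone <- sent
	}()

	// Client: send the startup message, then reply to each server message.
	toServer <- 0
	clientSent++
	for seq := range toClient {
		if seq+1 == total {
			break
		}
		toServer <- seq + 1
		clientSent++
	}
	close(toServer)
	serverSent = <-serverDone
	return clientSent, serverSent
}

func main() {
	const total = 200000
	start := time.Now()
	c, s := pingPong(total)
	elapsed := time.Since(start)
	fmt.Printf("client sent %d, server sent %d in %v (%.0f msgs/sec)\n",
		c, s, elapsed, float64(c+s)/elapsed.Seconds())
}
```

Calling `pingPong(5)` returns (3, 2): the client sends messages 0, 2 and 4, and the server sends 1 and 3.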

I created 3 EKS clusters so that I could run my experiment on each CNI at the same time. Each cluster was created using the wonderful eksctl tool, with the --ssh-access=true flag so that I could SSH into the EC2 instances to verify the CNI configuration.

Setting Up CNI

Amazon VPC CNI

Create the EKS cluster:

eksctl create cluster --name awsvpccnitest --ssh-access=true

There is no additional configuration needed to set up this CNI after the EKS cluster has been created, because it is the default CNI for EKS.

Calico CNI

Create the EKS cluster with 0 nodes so that the Amazon VPC CNI doesn’t get configured on any EC2 instances:

eksctl create cluster --name calicocnitest --ssh-access=true --nodes 0

Delete the Amazon VPC CNI:

kubectl delete ds aws-node -n kube-system

Now install Calico by following the instructions here. Note that this installation method is only valid for k8s clusters with 50 or fewer nodes.

Now that Calico is installed, we can turn on some EC2 instances.

# Get the node group name for the cluster

eksctl get nodegroups --cluster calicocnitest

# Take the value in the NODEGROUP column and place it into this command to scale to 1 node

eksctl scale nodegroup --cluster calicocnitest --name <node group name> --nodes 1

Weave Net CNI

Create the EKS cluster with 0 nodes so that the Amazon VPC CNI doesn’t get configured on any EC2 instances:

eksctl create cluster --name weavenetcnitest --ssh-access=true --nodes 0

Delete the Amazon VPC CNI:

kubectl delete ds aws-node -n kube-system

Now install Weave Net:

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

Now that Weave Net is installed, we can turn on some EC2 instances.

# Get the node group name for the cluster

eksctl get nodegroups --cluster weavenetcnitest

# Take the value in the NODEGROUP column and place it into this command to scale to 1 node

eksctl scale nodegroup --cluster weavenetcnitest --name <node group name> --nodes 1

Bootstrap the Cluster

Now that we have 3 running EKS clusters with 3 different CNIs, we will install the gRPC ping/pong client and server, as well as the Prometheus Operator to collect metrics. I chose to do this using Weave Flux, which makes installation really simple.

We will need to do the following for each of the 3 clusters.

First, install Tiller into the cluster so that Helm (v2) can work:

kubectl -n kube-system create sa tiller

kubectl create clusterrolebinding tiller-cluster-rule \
  --clusterrole=cluster-admin \
  --serviceaccount=kube-system:tiller

helm init --service-account tiller --wait

Next, we will install the apps using Flux:

git clone https://github.com/jwenz723/flux-grpcdemo

cd flux-grpcdemo

./scripts/flux-init.sh git@github.com:jwenz723/flux-grpcdemo

Note that if you are following along, you will need to fork this repo and use your own git repo address. You can find my project repo here.

At startup, Flux generates an SSH key and logs the public key; the above command prints it.

To sync the cluster state with git, copy the public key and create a deploy key with write access on your GitHub repository: on GitHub, go to Settings > Deploy keys, click Add deploy key, check Allow write access, paste the Flux public key, and click Add key.

Once Flux has write access to your repository, it will:

- install the Prometheus Operator Helm release

- install the grpcdemo-client Helm release

- install the grpcdemo-server Helm release

Visualizing the Data

With everything running, I used Grafana to visualize the data. I chose to run Grafana on my own computer and connect to the Prometheus instance in each of the 3 EKS clusters using kubectl port-forward. You can install Grafana by following the instructions here.

To port-forward to each of the 3 Prometheus instances, I ran these commands:

kubectl --context eks-weavenetCNI port-forward -n promop svc/prometheus-operated 9090:9090 &

kubectl --context eks-calicoCNI port-forward -n promop svc/prometheus-operated 9091:9090 &

kubectl --context eks-awsvpcCNI port-forward -n promop svc/prometheus-operated 9092:9090 &

Next, I opened Grafana and created a dashboard with a single visualization that runs the Prometheus query sum(rate(grpc_messages_sent[3m])) against each Prometheus instance. This can be done by selecting the --Mixed-- data source when creating the Grafana visualization. If you want to import my dashboard, you can download the JSON here.

Requests/Sec Results

1 Node

With each EKS cluster running only 1 node, the Amazon VPC CNI performed slightly better than the other 2 CNIs.

Average requests per second (counting messages sent by both the gRPC client and the server), ranked best first:

- Amazon VPC CNI: 10.9116k

- Weave Net CNI: 10.6719k

- Calico CNI: 10.5539k
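To put "slightly better" in numbers, the relative differences can be computed directly from the averages above. A small Go sketch, where `percentFaster` is a hypothetical helper:

```go
package main

import "fmt"

// percentFaster returns how much higher a is than b, as a percentage of b.
func percentFaster(a, b float64) float64 {
	return (a - b) / b * 100
}

func main() {
	// 1-node averages from the list above, in thousands of messages per second.
	vpc, weave, calico := 10.9116, 10.6719, 10.5539
	fmt.Printf("Amazon VPC vs Weave Net: %.2f%%\n", percentFaster(vpc, weave))
	fmt.Printf("Amazon VPC vs Calico:    %.2f%%\n", percentFaster(vpc, calico))
}
```

This prints roughly 2.25% over Weave Net and 3.39% over Calico.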

I was surprised to see that Weave Net outperformed Calico in this test. Here is a screenshot of the test covering 10 minutes:

2 Nodes

The first step in this test was to scale each cluster to 2 nodes, using the following commands:

# Scale AWS VPC EKS Cluster

eksctl get nodegroups --cluster awsvpccnitest

# Replace <node group name> with NODEGROUP value from previous cmd

eksctl scale nodegroup --cluster awsvpccnitest --name <node group name> --nodes 2

# Scale Calico EKS Cluster

eksctl get nodegroups --cluster calicocnitest

# Replace <node group name> with NODEGROUP value from previous cmd

eksctl scale nodegroup --cluster calicocnitest --name <node group name> --nodes 2

# Scale Weave Net EKS Cluster

eksctl get nodegroups --cluster weavenetcnitest

# Replace <node group name> with NODEGROUP value from previous cmd

eksctl scale nodegroup --cluster weavenetcnitest --name <node group name> --nodes 2

Once both nodes are running, you can check which node the gRPC client/server end up on by running:

kubectl get pods -n grpcdemo -o yaml

If you’re lucky, you may already have 1 pod running on each node. In my case, I had to force which node each pod was deployed to by modifying each deployment. First, I found which zone each node was in by running this command for each node:

kubectl get node <node name> -o yaml | grep failure-domain.beta.kubernetes.io/zone

Next, I modified the server deployment:

kubectl edit deploy -n grpcdemo grpcdemo-server

I added the following nodeSelector (as documented here) to the deployment's pod template (us-west-2a and us-west-2b were the values returned by the command above for my two nodes):

spec:
  template:
    spec:
      nodeSelector:
        failure-domain.beta.kubernetes.io/zone: us-west-2a

I did the same for the client, but with the other zone value:

kubectl edit deploy -n grpcdemo grpcdemo-client

Then I added the following (as documented here):

spec:
  template:
    spec:
      nodeSelector:
        failure-domain.beta.kubernetes.io/zone: us-west-2b

Now verify that the edit was successful by checking that there are 2 pods in a ‘Running’ status and that the NODE value differs between them:

$ kubectl get pods -n grpcdemo -o wide

NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE                                          NOMINATED NODE
grpcdemo-client-79bb44ddbb-jcdln   1/1     Running   0          8s    192.168.1.153   ip-192-168-1-14.us-west-2.compute.internal    <none>
grpcdemo-server-9fc5cd7d-k8vng     1/1     Running   0          42s   192.168.1.154   ip-192-168-1-154.us-west-2.compute.internal   <none>
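The NODE check can also be scripted. This Go sketch reads `kubectl get pods -n grpcdemo -o json` from stdin and reports whether every pod is scheduled on a different node, using only the `metadata.name` and `spec.nodeName` fields of the pod list. `onDistinctNodes` is a hypothetical name, and this is an alternative to eyeballing the table, not part of the original setup.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
)

// podList mirrors only the fields needed from `kubectl get pods -o json`.
type podList struct {
	Items []struct {
		Metadata struct {
			Name string `json:"name"`
		} `json:"metadata"`
		Spec struct {
			NodeName string `json:"nodeName"`
		} `json:"spec"`
	} `json:"items"`
}

// onDistinctNodes reports whether every pod is scheduled and no two pods share a node.
func onDistinctNodes(data []byte) (bool, error) {
	var pods podList
	if err := json.Unmarshal(data, &pods); err != nil {
		return false, err
	}
	seen := map[string]string{} // node name -> first pod seen on it
	for _, p := range pods.Items {
		node := p.Spec.NodeName
		if node == "" {
			fmt.Printf("%s is not scheduled yet\n", p.Metadata.Name)
			return false, nil
		}
		if other, ok := seen[node]; ok {
			fmt.Printf("%s and %s share node %s\n", other, p.Metadata.Name, node)
			return false, nil
		}
		seen[node] = p.Metadata.Name
	}
	return true, nil
}

func main() {
	data, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	ok, err := onDistinctNodes(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("pods on distinct nodes:", ok)
}
```

Run it as `kubectl get pods -n grpcdemo -o json | go run main.go`, assuming the code is saved as main.go. Parsing JSON avoids depending on the column layout of `-o wide`, which can differ between kubectl versions.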

Now that the client and the server were forced to run on different nodes, I was able to test networking performance across nodes.

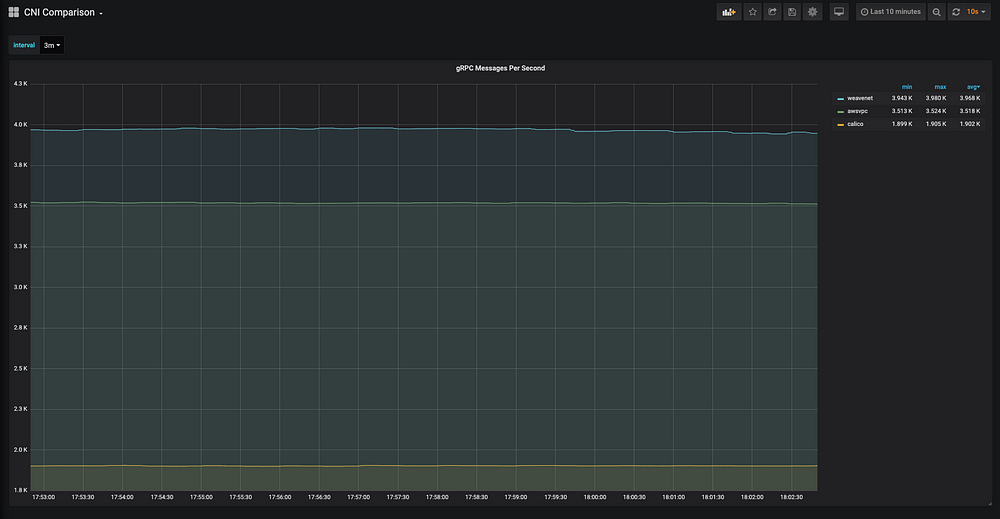

Here are the results:

Average requests per second (counting messages sent by both the gRPC client and the server), ranked best first:

- Weave Net CNI: 3.968k

- Amazon VPC CNI: 3.518k

- Calico CNI: 1.902k
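Comparing the two runs also shows how much throughput each CNI kept once the client and server were on different nodes. A Go sketch using only the averages reported in this post, where `retainedPercent` is a hypothetical helper:

```go
package main

import "fmt"

// retainedPercent returns the 2-node throughput as a percentage of the
// 1-node throughput for the same CNI.
func retainedPercent(oneNode, twoNode float64) float64 {
	return twoNode / oneNode * 100
}

func main() {
	// Averages from the 1-node and 2-node runs, in thousands of messages per second.
	results := []struct {
		cni              string
		oneNode, twoNode float64
	}{
		{"Amazon VPC", 10.9116, 3.518},
		{"Weave Net", 10.6719, 3.968},
		{"Calico", 10.5539, 1.902},
	}
	for _, r := range results {
		fmt.Printf("%-10s keeps %.1f%% of its 1-node rate\n", r.cni, retainedPercent(r.oneNode, r.twoNode))
	}
}
```

This prints about 32.2% for the Amazon VPC CNI, 37.2% for Weave Net and 18.0% for Calico.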

According to the benchmarks that inspired me, Calico seemed to be the fastest and Weave Net was on the slower end. I was therefore surprised to see that Weave Net was the most performant and Calico the least performant in my test.

System Utilization Results

1 Node

CPU Utilization, measured in CPU seconds used per second rather than CPU % (best to worst):

- Weave Net CNI: 0.00064

- Amazon VPC CNI: 0.00103

- Calico CNI: 0.01146
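Because these CPU figures are CPU seconds per second, they convert directly to Kubernetes millicores (1 CPU second per second = 1000m = one full core). A small Go sketch of that conversion applied to the 1-node numbers, where `toMillicores` is a hypothetical helper:

```go
package main

import "fmt"

// toMillicores converts a CPU rate in CPU-seconds per second (cores) into
// Kubernetes millicores; 1.0 equals 1000m, i.e. one full core.
func toMillicores(cpuSecondsPerSecond float64) float64 {
	return cpuSecondsPerSecond * 1000
}

func main() {
	// 1-node CPU figures from the list above.
	cpu := []struct {
		cni  string
		rate float64
	}{
		{"Weave Net", 0.00064},
		{"Amazon VPC", 0.00103},
		{"Calico", 0.01146},
	}
	for _, c := range cpu {
		m := toMillicores(c.rate)
		// m millicores out of 1000 per core is m/10 percent of one core.
		fmt.Printf("%-10s %.2fm (%.3f%% of one core)\n", c.cni, m, m/10)
	}
}
```

Even Calico's 0.01146 works out to about 11.5m, roughly 1.1% of a single core.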

Memory Utilization (best to worst):

- Calico CNI: 31.7 MiB

- Amazon VPC CNI: 32.7 MiB

- Weave Net CNI: 102.9 MiB

2 Nodes

CPU Utilization, measured in CPU seconds used per second rather than CPU % (best to worst):

- Weave Net CNI: 0.00162

- Amazon VPC CNI: 0.00184

- Calico CNI: 0.02404

Memory Utilization (best to worst):

- Calico CNI: 63.2 MiB

- Amazon VPC CNI: 65.1 MiB

- Weave Net CNI: 202.0 MiB
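Memory roughly doubled from 1 to 2 nodes for every CNI. Assuming the 2-node figures are totals summed across both nodes, dividing by the node count gives a per-node footprint to compare with the 1-node run. A Go sketch, where `perNode` is a hypothetical helper:

```go
package main

import "fmt"

// perNode divides a cluster-wide total by the node count. This assumes the
// 2-node memory figures are sums across both nodes.
func perNode(total float64, nodes int) float64 {
	return total / float64(nodes)
}

func main() {
	// Memory in MiB from the 1-node and 2-node lists above.
	mem := []struct {
		cni              string
		oneNode, twoNode float64
	}{
		{"Calico", 31.7, 63.2},
		{"Amazon VPC", 32.7, 65.1},
		{"Weave Net", 102.9, 202.0},
	}
	for _, m := range mem {
		fmt.Printf("%-10s 1 node: %.1f MiB, 2 nodes: %.2f MiB per node\n",
			m.cni, m.oneNode, perNode(m.twoNode, 2))
	}
}
```

Under that assumption, each CNI's per-node memory stays close to its 1-node value, for example 101.00 MiB per node for Weave Net versus 102.9 MiB on a single node.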

Conclusion

I am not claiming that the results above are conclusive. From my admittedly naive benchmarking, I concluded that the Amazon VPC CNI performs at least comparably to the other CNIs available. I believe that any of the 3 CNIs compared here would be an excellent option.